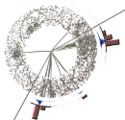

Caltech, the University of Michigan, and the University of Victoria joined forces to demonstrate LHC data movement at 100 Gbps during the SuperComputing 2011 (SC11) conference. A peak of 151 Gbps had previously been demonstrated by Caltech, FNAL, Michigan and SLAC at the SC05 conference in Seattle, but that result aggregated multiple 10GE connections.

For these transfers, a single 100GE connection was terminated in the booth on a Brocade 100GE router, and a similar router was used at the University of Victoria. The University of Michigan used its MiLR infrastructure, augmented with FSP-3000 DWDM equipment from ADVA and switches from Cisco, to connect to a single Dell server fitted with the latest Mellanox 40GE NIC. ESnet carried the 40GE connection between StarLight in Chicago and SC11 in Seattle, where it was merged into the 100GE wave.

Caltech's FDT (Fast Data Transfer) application was used to move data from storage to storage and to demonstrate extremely high end-to-end bandwidth for sharing large LHC datasets in near real time. This was a preview of a possible future LHC site configuration that relies on a few powerful servers to provide low-latency sharing of, and access to, large datasets.

Michigan's connection to SC11 was made possible by ADVA, Cisco, Mellanox and ColorChip, all of which contributed equipment and expertise.

We went from boxes on the floor to a powerful, compact Tier-2-equivalent facility in two days. The two racks pictured here contain switches from Brocade, Force10 and Cisco with a total of two 100G ports, 48 40G ports and 72 10G ports. There are 12 servers with 40G NICs (5 in PCIe Gen3 hosts, 7 in PCIe Gen2 hosts) and 19 servers with 10G NICs. In total there are 472 processor cores, including 72 Intel "Sandy Bridge" cores. Storage includes 48 120 GB SSDs, and local SATA disk space totals 324 TB. All of this had to be configured to connect to remote resources in order to demonstrate "future" capabilities for LHC data transfer.
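As a rough sense of scale, the server and port counts above can be totaled to compare the nominal server NIC capacity with the two 100G switch ports. This is a back-of-the-envelope sketch that assumes one NIC per server, each running at its nominal line rate; real throughput would be lower (the PCIe Gen2 hosts, for instance, may not sustain a full 40G), so the result is an upper bound rather than a measurement. The constant and function names are illustrative only.

```python
# Counts taken from the description of the two SC11 racks.
SERVERS_40G = 12        # 5 PCIe Gen3 hosts + 7 PCIe Gen2 hosts
SERVERS_10G = 19
PORTS_100G = 2          # 100G switch ports in the racks


def aggregate_nic_gbps(n40: int, n10: int) -> int:
    """Sum of nominal NIC line rates in Gbps.

    Assumption (not stated in the source): exactly one NIC per server,
    counted at its nominal rate with no PCIe or protocol overhead.
    """
    return n40 * 40 + n10 * 10


nic_total = aggregate_nic_gbps(SERVERS_40G, SERVERS_10G)
port_total = PORTS_100G * 100

print(f"nominal server NIC capacity: {nic_total} Gbps")
print(f"100G port capacity:          {port_total} Gbps")
print(f"ratio:                       {nic_total / port_total:.2f}:1")
```

On these assumptions the servers could in principle offer 670 Gbps against 200 Gbps of 100G ports, which is why a handful of well-tuned servers can be enough to fill a 100GE wave.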

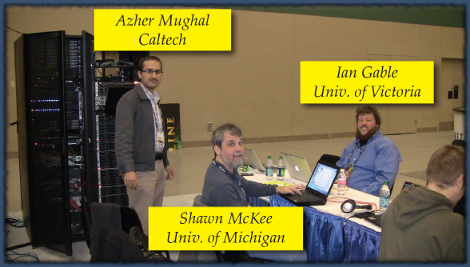

A successful SuperComputing (SC) demonstration takes a team effort. People with expertise in computing, networking, storage, operating systems and protocols must work closely together to get "cutting-edge" hardware to interoperate. Many of the challenges we face stem from using the newest hardware with untested BIOS and firmware releases, and we have only a short time window to learn what is new and how best to make it work in the context of our demonstrations.

There are hundreds of booths from vendors, labs and universities. The LHC demonstrations are typically run from the Caltech booth but often involve other organizations such as Internet2, ESnet, FNAL and BNL.

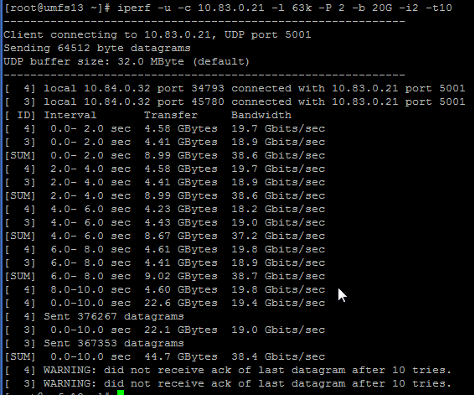

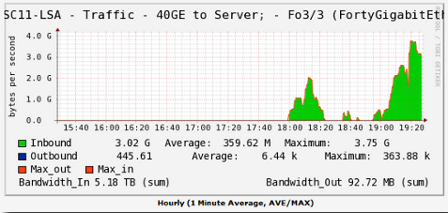

We were able to demonstrate 40 Gbps data rates between our site and the conference in Seattle. The demonstration system was provided by Dell and used a Mellanox 40GE NIC connected to a Cisco 6506-E.

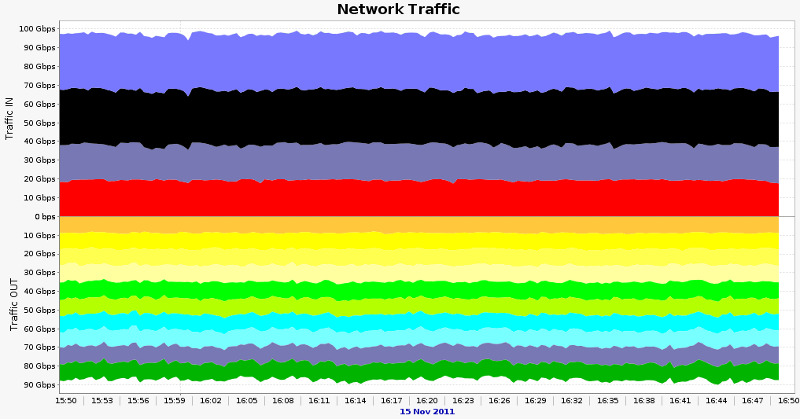

The results shown here are from 10 servers at UVic exchanging data with 7 servers at SC11. Traffic flowed into SC11 at about 97 Gbps while simultaneously flowing out at about 87 Gbps.

The UVic servers were Dell R710s; the SC11 side used a mix of Dell R510s, Supermicro servers and two pre-release Dell servers.
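The reported rates can be turned into rough per-server averages. This sketch assumes the traffic was spread evenly across the servers, which the results do not state, so the figures are averages rather than observed per-host rates. Because Ethernet links are full duplex, the inbound and outbound flows each have their own line-rate budget, which is how roughly 184 Gbps of combined traffic can coexist while each direction stays below 100 Gbps. The names used are illustrative only.

```python
# Approximate rates and server counts from the UVic <-> SC11 results.
IN_GBPS = 97.0      # traffic flowing into SC11 (sent by UVic)
OUT_GBPS = 87.0     # traffic flowing out of SC11 (received by UVic)
UVIC_SERVERS = 10
SC11_SERVERS = 7


def per_server(rate_gbps: float, servers: int) -> float:
    """Average share per server, assuming an even split (an assumption)."""
    return rate_gbps / servers


total = IN_GBPS + OUT_GBPS  # both directions combined (full duplex)

print(f"combined bidirectional rate:       {total:.0f} Gbps")
print(f"avg sent per UVic server:          {per_server(IN_GBPS, UVIC_SERVERS):.1f} Gbps")
print(f"avg received per SC11 server:      {per_server(IN_GBPS, SC11_SERVERS):.1f} Gbps")
print(f"avg sent per SC11 server:          {per_server(OUT_GBPS, SC11_SERVERS):.1f} Gbps")
```

Under the even-split assumption each UVic server would be sending close to 10 Gbps, while each SC11 server would be handling more than 10 Gbps in each direction at once.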