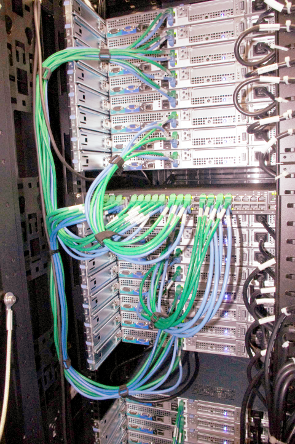

The ATLAS Great Lakes Tier 2 (AGLT2) provides computing and storage capacity for US ATLAS physicists running ATLAS simulations and data analysis. We currently provide more than 2,500 multi-core job slots (12,000 cores) and over 7 petabytes of storage capacity interfaced to the Open Science Grid. Our cluster systems run Scientific Linux 7. Our job scheduling system is Condor, and we use dCache, Lustre, AFS, and NFS as storage systems.

ATLAS data movement is structured into Tiers. Tier 1 sites are generally large national institutions. The Tier 1 for the entire US is Brookhaven National Lab.

Tier 1 sites hold all the data necessary to serve their associated Tier 2 sites. From there, subsets of the data move to different Tier 2 sites such as ours. Tier 2 sites "subscribe" to the datasets from the Tier 1 that they need to host. Most common datasets are replicated at several Tier 2 sites, so jobs can easily run at any site.
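The subscription model described above can be illustrated with a small sketch. This is only a toy model and not the actual ATLAS data management software: the `Tier1` class, its methods, the site name `OtherTier2`, and the dataset names are all hypothetical and chosen for illustration.

```python
class Tier1:
    """Hypothetical stand-in for a Tier 1 site holding every dataset its Tier 2 sites need."""

    def __init__(self, name, datasets):
        self.name = name
        self.datasets = set(datasets)
        # Maps a dataset name to the set of Tier 2 sites hosting a replica of it.
        self.replicas = {}

    def subscribe(self, tier2_name, dataset):
        """Record that a Tier 2 site has subscribed to (and now hosts) a dataset."""
        if dataset not in self.datasets:
            raise KeyError(f"{dataset} is not available at {self.name}")
        self.replicas.setdefault(dataset, set()).add(tier2_name)

    def sites_hosting(self, dataset):
        """Return the Tier 2 sites where a job needing this dataset could run."""
        return sorted(self.replicas.get(dataset, ()))


# Illustrative names only; these are not real dataset identifiers.
bnl = Tier1("BNL", ["dataset_A", "dataset_B"])
bnl.subscribe("AGLT2", "dataset_A")
bnl.subscribe("OtherTier2", "dataset_A")
bnl.subscribe("AGLT2", "dataset_B")

print(bnl.sites_hosting("dataset_A"))
print(bnl.sites_hosting("dataset_B"))
```

In this sketch, `dataset_A` is replicated at two Tier 2 sites, so a job that needs it could be sent to either one, while `dataset_B` is hosted at only one site.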

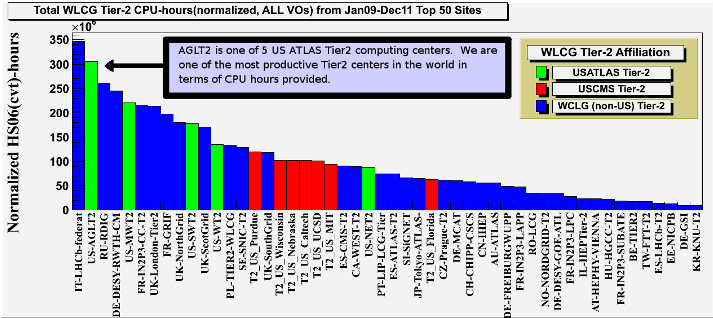

AGLT2 is one of the top ATLAS Tier 2 sites worldwide in terms of CPU hours provided.

In any given 24-hour period, we average more than 8,000 completed ATLAS jobs.

We transfer an average of 1-2 TB of data per day to and from other sites.